(PDF) Incorporating representation learning and multihead attention

By a mysterious writer

Last updated 26 April 2025

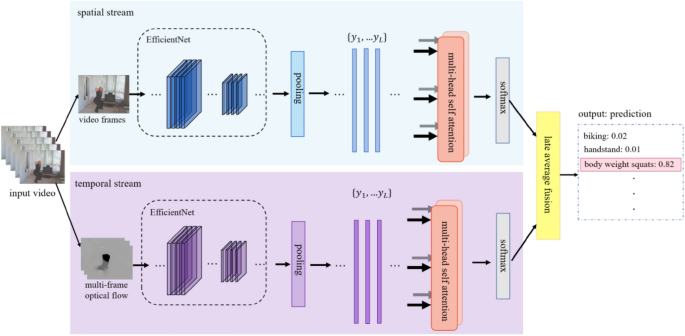

Multi-head attention-based two-stream EfficientNet for action recognition

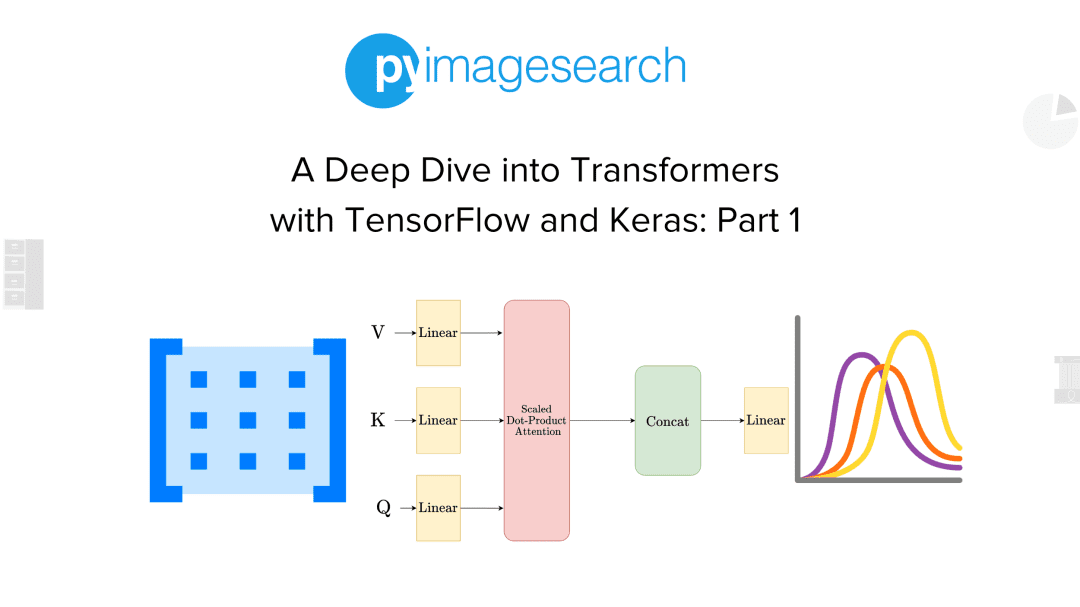

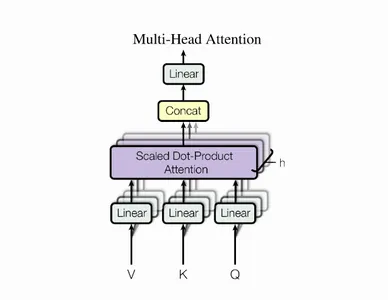

How to Implement Multi-Head Attention from Scratch in TensorFlow and Keras

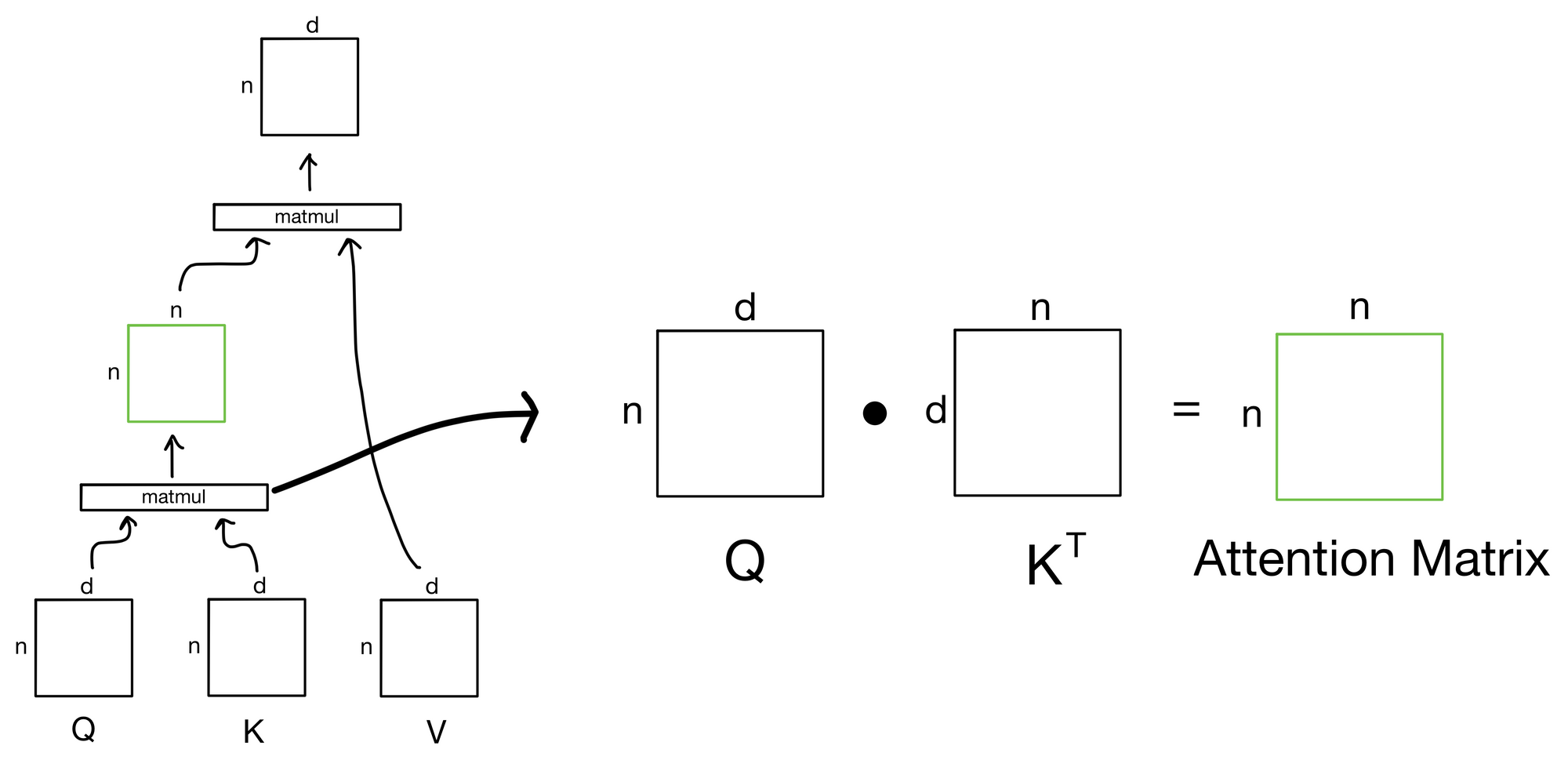

Explained: Multi-head Attention (Part 1)

Analysis of the mixed teaching of college physical education based on the health big data and blockchain technology [PeerJ]

[PDF] Interpretable Multi-Head Self-Attention Architecture for Sarcasm Detection in Social Media

Mockingjay: Unsupervised Speech Representation Learning with Deep Bidirectional Transformer Encoders – arXiv Vanity

RNN with Multi-Head Attention

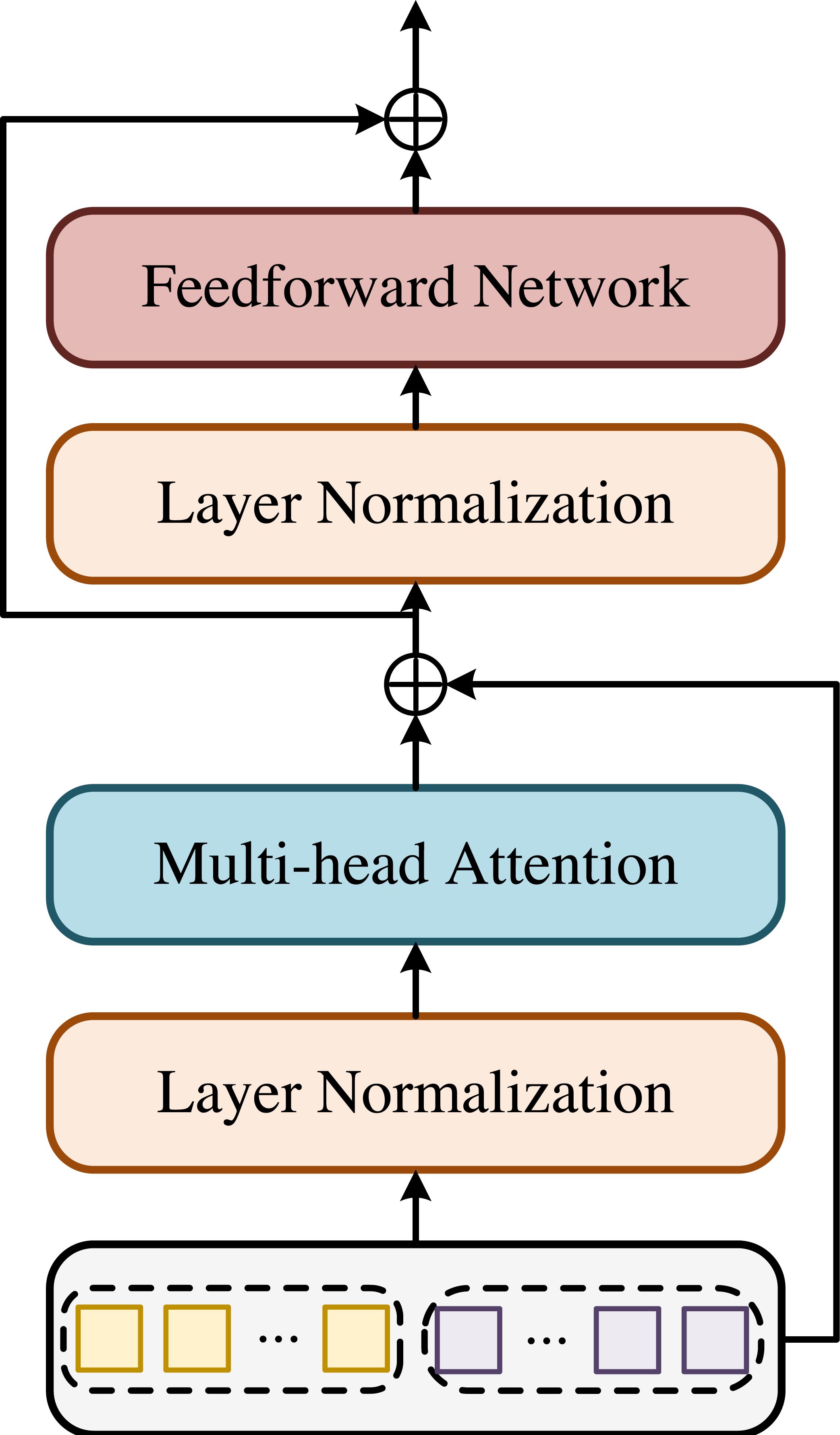

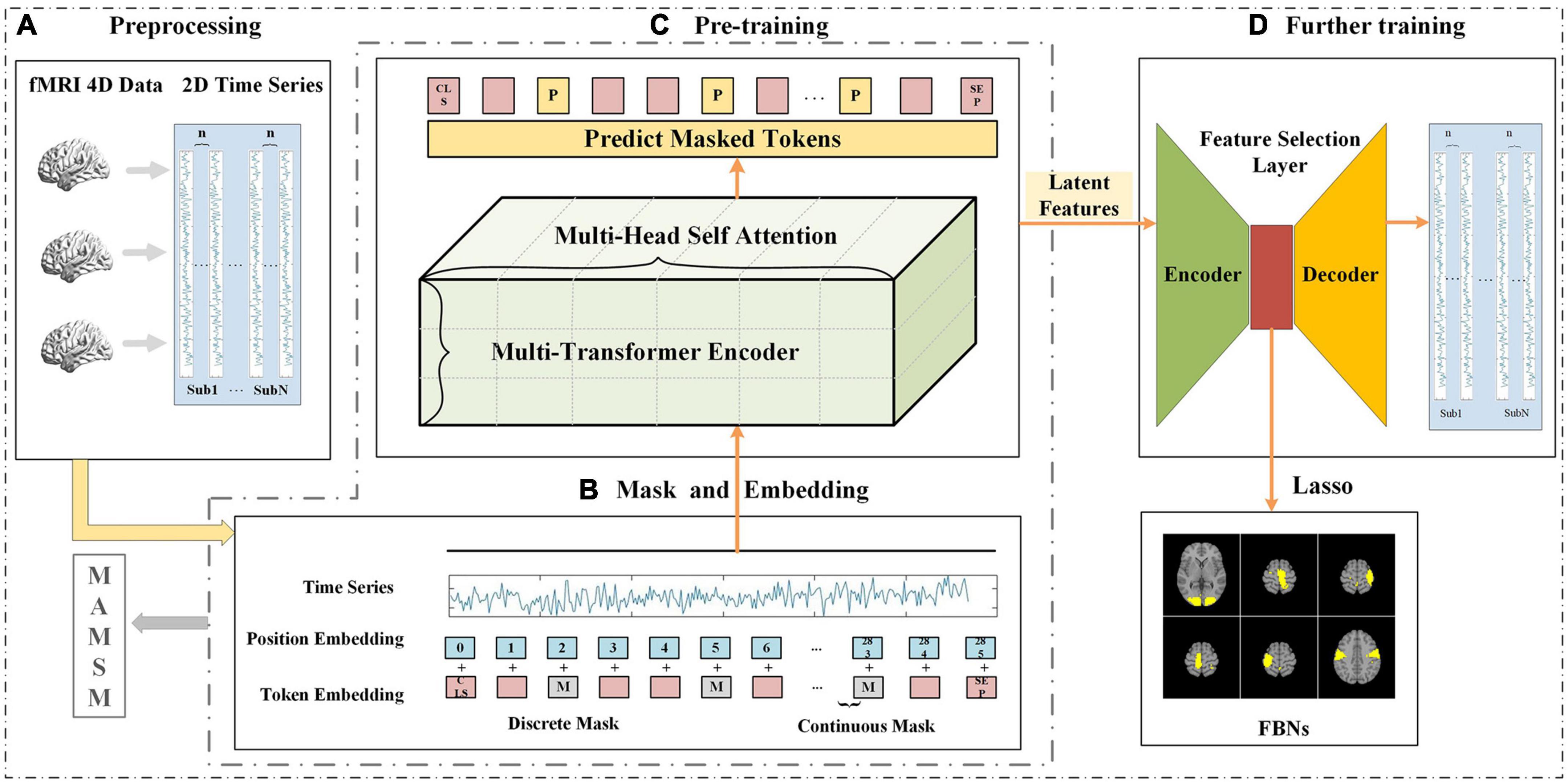

Frontiers | Multi-head attention-based masked sequence model for mapping functional brain networks

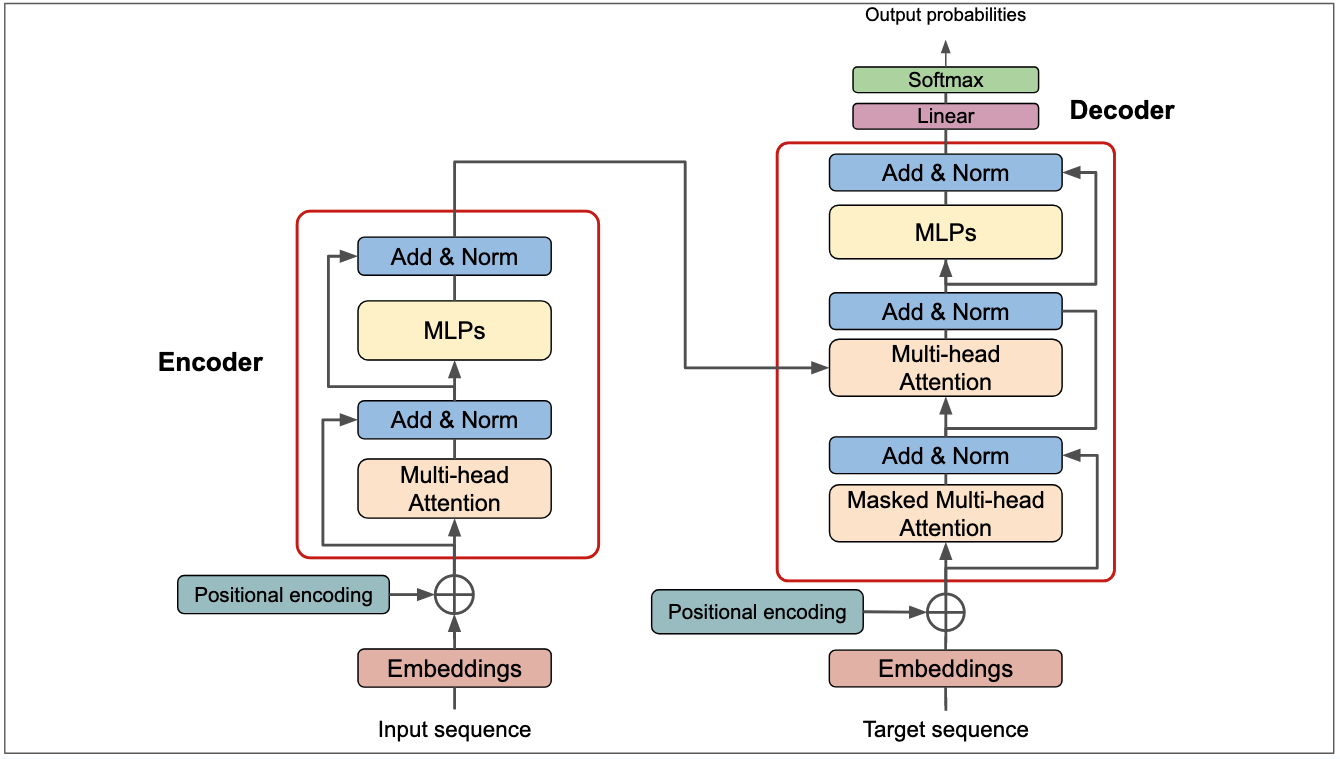

A Deep Dive into Transformers with TensorFlow and Keras: Part 1 - PyImageSearch

AI Research Blog - The Transformer Blueprint: A Holistic Guide to the Transformer Neural Network Architecture

Understanding Attention Mechanisms Using Multi-Head Attention

(PDF) Multi-Head Attention with Diversity for Learning Grounded Multilingual Multimodal Representations