Computing GPU memory bandwidth with Deep Learning Benchmarks

By a mysterious writer

Last updated 26 April 2025

In this article, we take an in-depth look at GPU memory bandwidth and how it affects the processing speed of the accelerator for deep learning and other related computational tasks.
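
As a rough illustration of what "memory bandwidth" means in numbers, the sketch below uses the standard relation between per-pin data rate and bus width to estimate a theoretical peak, and then derives a lower bound on the time needed to move a buffer at that rate. The function names are hypothetical, and the example figures (19.5 Gbit/s and 21 Gbit/s per pin on a 384-bit bus) are assumed from commonly published specifications for the RTX 3090 and RTX 4090; check the vendor data sheets before relying on them. Measured bandwidth in real benchmarks is normally lower than this theoretical peak.

```python
def peak_bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    data_rate_gbps: effective data rate per pin, in Gbit/s
    bus_width_bits: width of the memory bus, in bits
    Dividing by 8 converts bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


def min_transfer_time_ms(num_bytes: float, bandwidth_gb_s: float) -> float:
    """Lower bound, in milliseconds, on the time to move num_bytes at the given bandwidth."""
    return num_bytes / (bandwidth_gb_s * 1e9) * 1e3


if __name__ == "__main__":
    # Assumed spec-sheet values, used here for illustration only.
    cards = {
        "RTX 3090": (19.5, 384),
        "RTX 4090": (21.0, 384),
    }
    for name, (rate, width) in cards.items():
        bw = peak_bandwidth_gb_s(rate, width)
        # Time to stream 1 GB (1e9 bytes) of weights or activations once.
        t = min_transfer_time_ms(1e9, bw)
        print(f"{name}: {bw:.0f} GB/s peak, at least {t:.2f} ms per GB moved")
```

The second function hints at why bandwidth matters for deep learning: a layer that has to read its weights from memory once per step cannot finish faster than this bound, no matter how much compute the GPU has.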


Benchmarking Large Language Models on NVIDIA H100 GPUs with

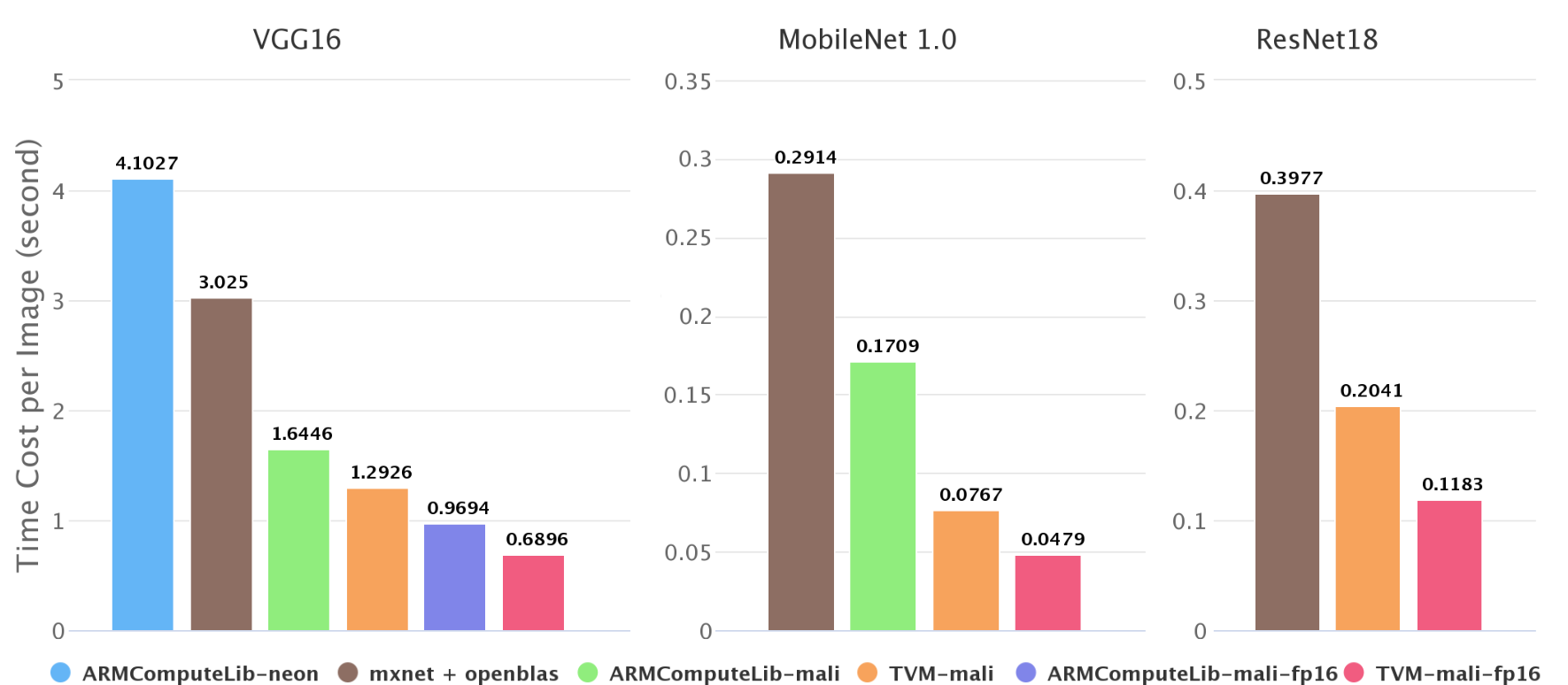

Optimizing Mobile Deep Learning on ARM GPU with TVM

Hardware Recommendations for Machine Learning / AI

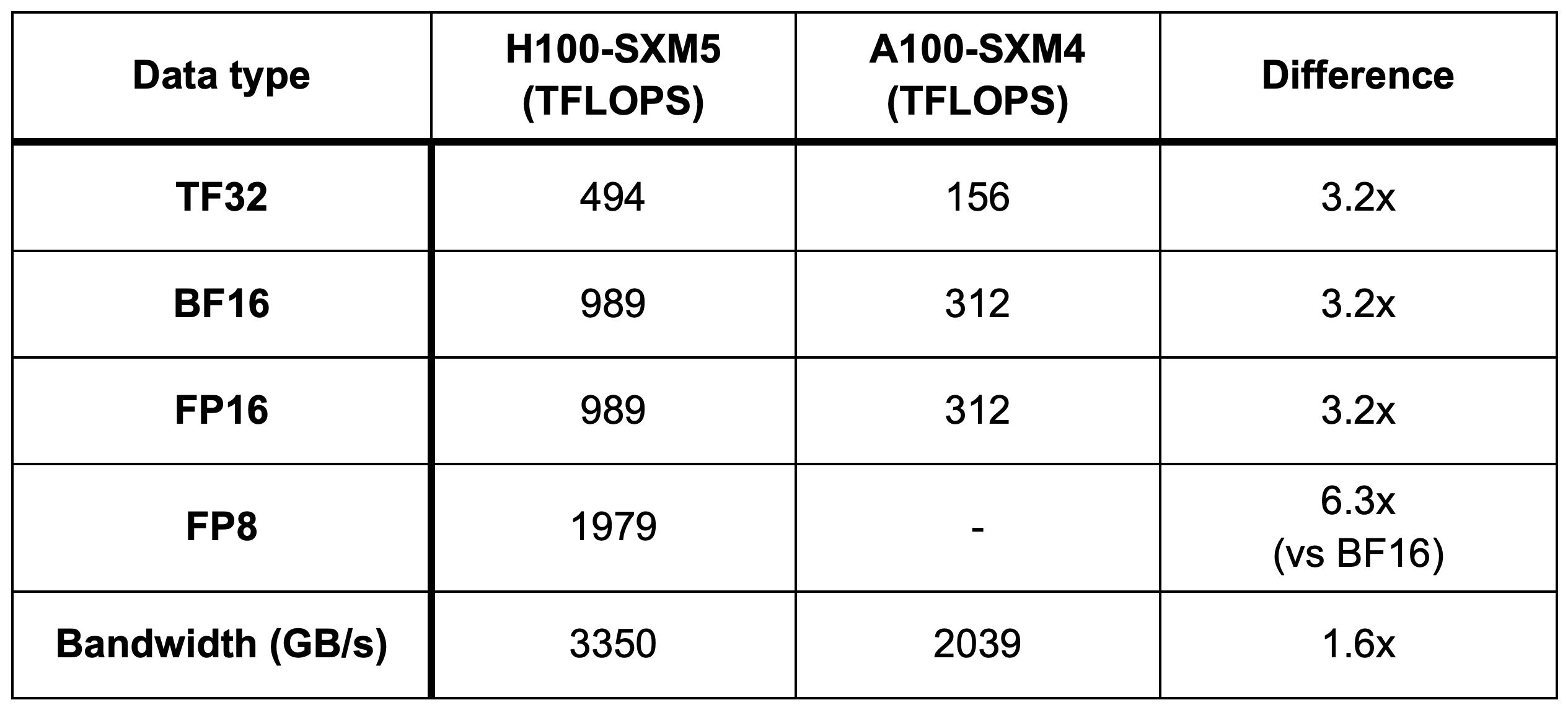

Peak performance and memory bandwidth of multiple NVIDIA GPU

NVIDIA A100 AI and High Performance Computing - Leadtek

ZeRO-2 & DeepSpeed: Shattering barriers of deep learning speed

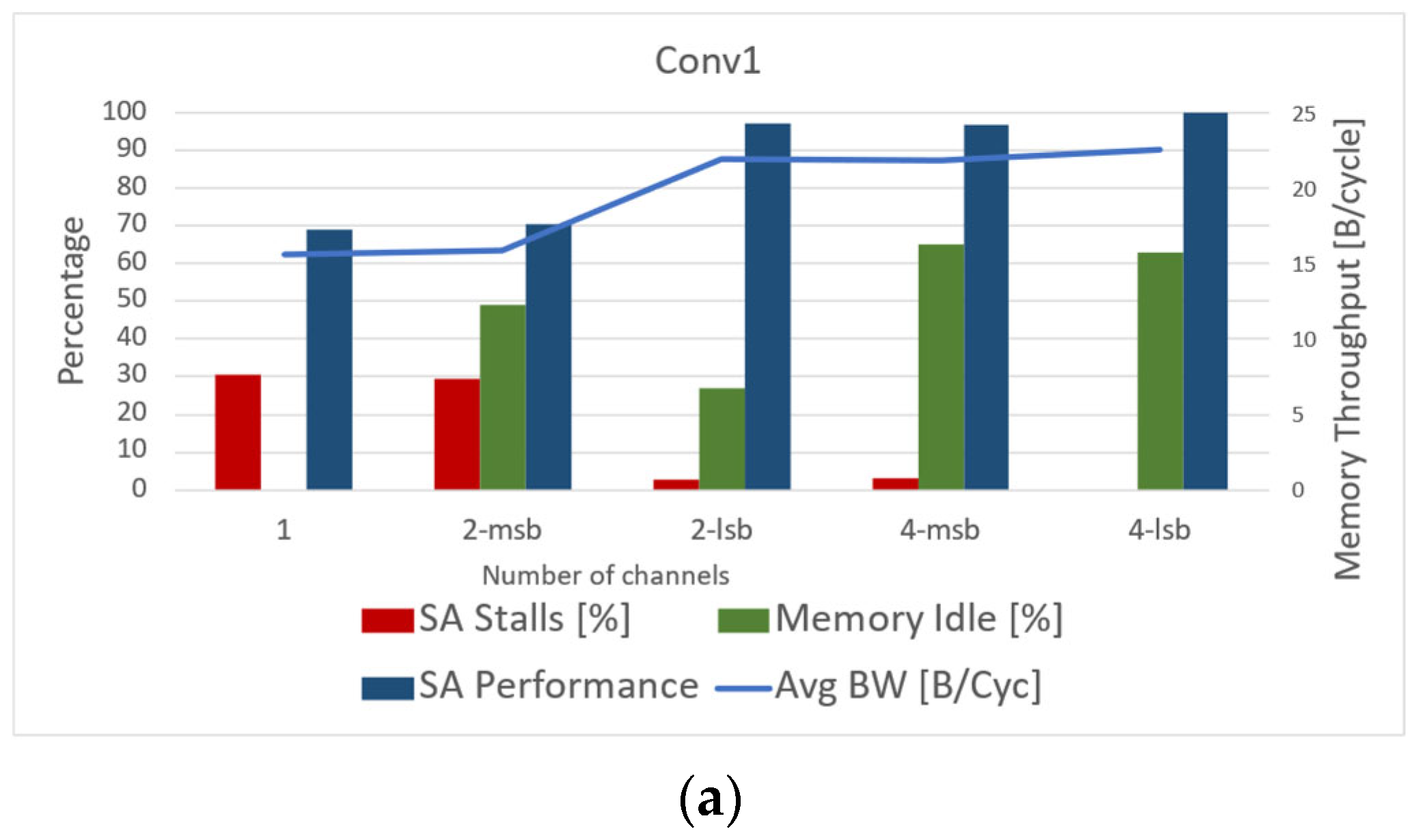

Mathematics, Free Full-Text

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs

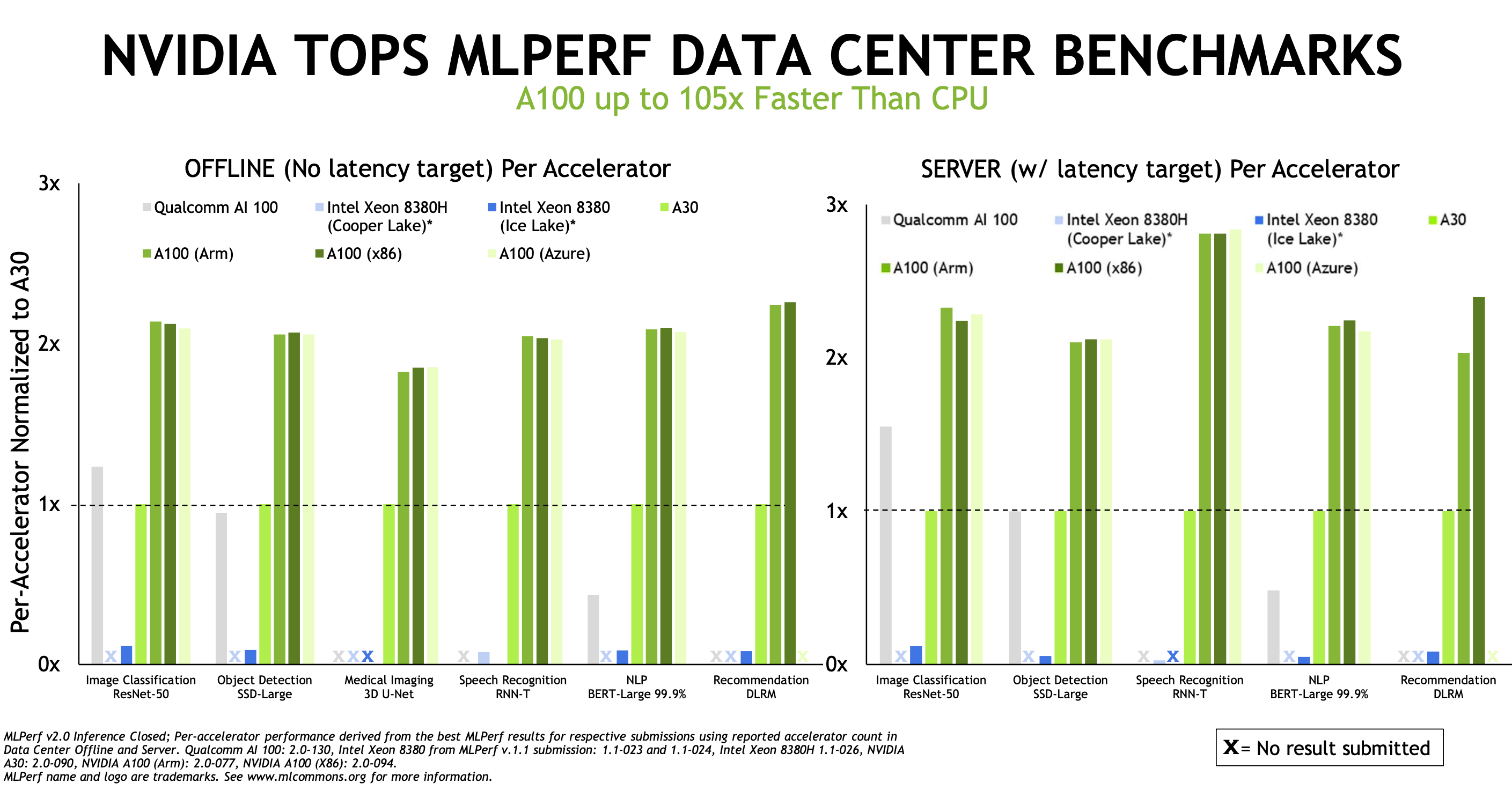

Nvidia Dominates MLPerf Inference, Qualcomm also Shines, Where's

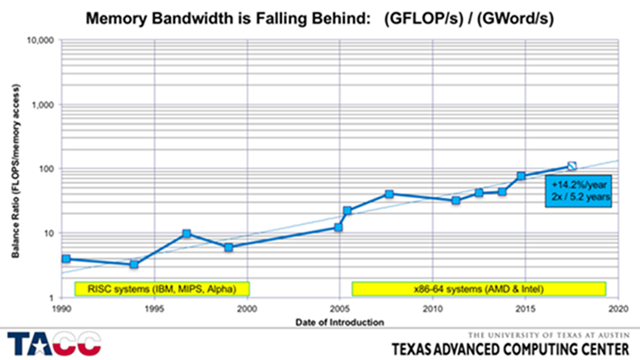

How High-Bandwidth Memory Will Break Performance Bottlenecks - The

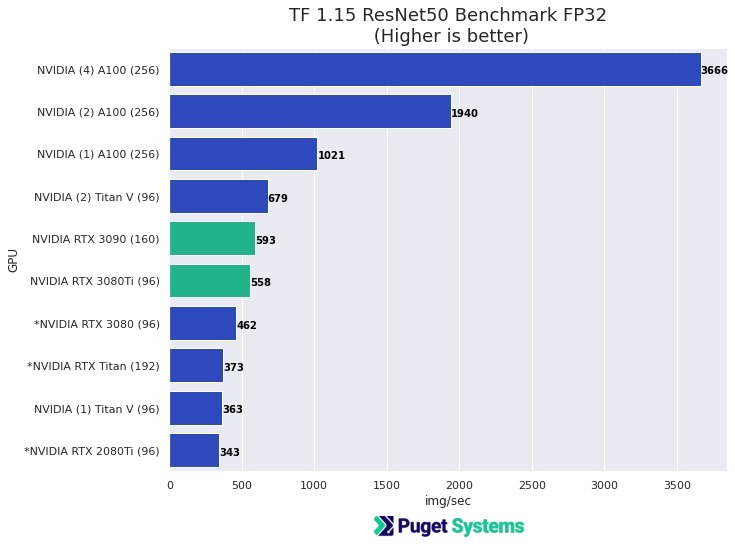

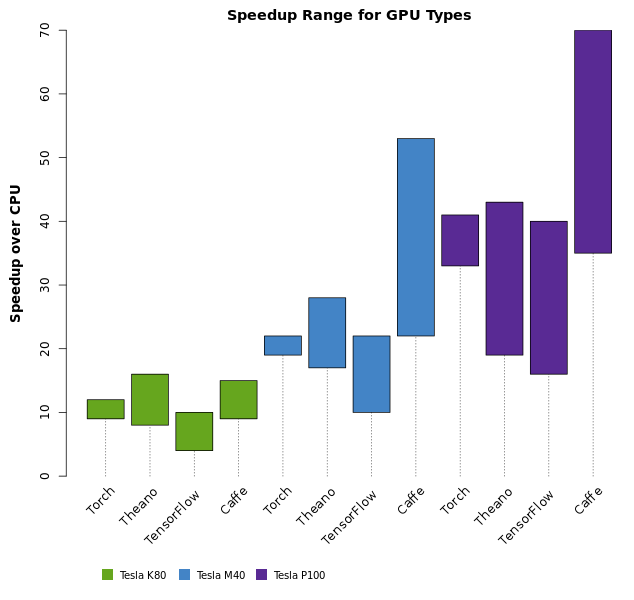

Deep Learning Benchmarks of NVIDIA Tesla P100 PCIe, Tesla K80, and

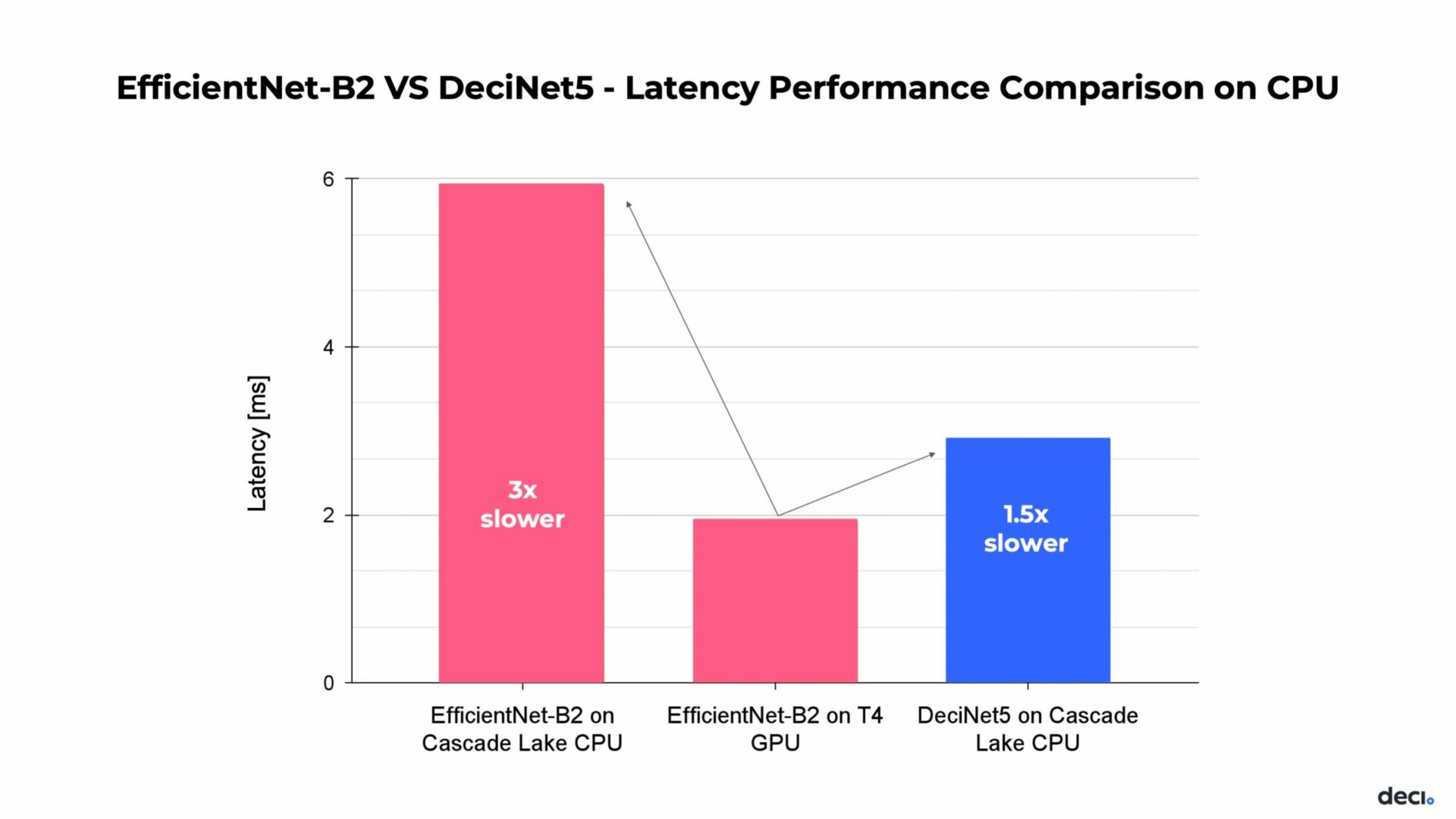

Can You Close the Performance Gap Between GPU and CPU for Deep

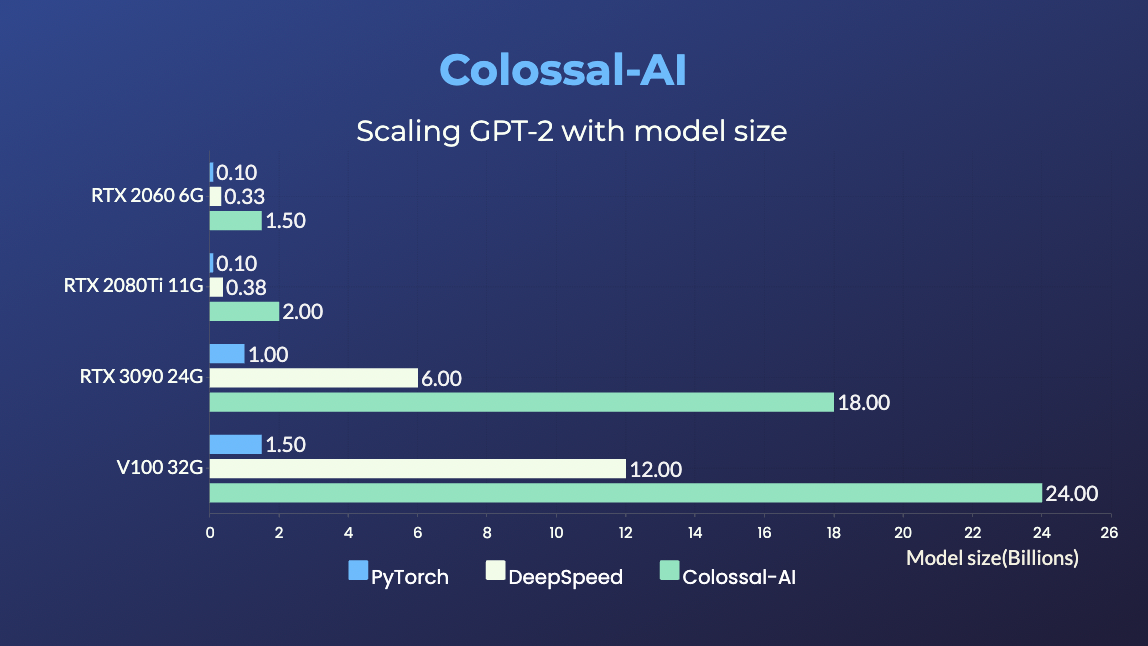

Train 18-billion-parameter GPT models with a single GPU on your

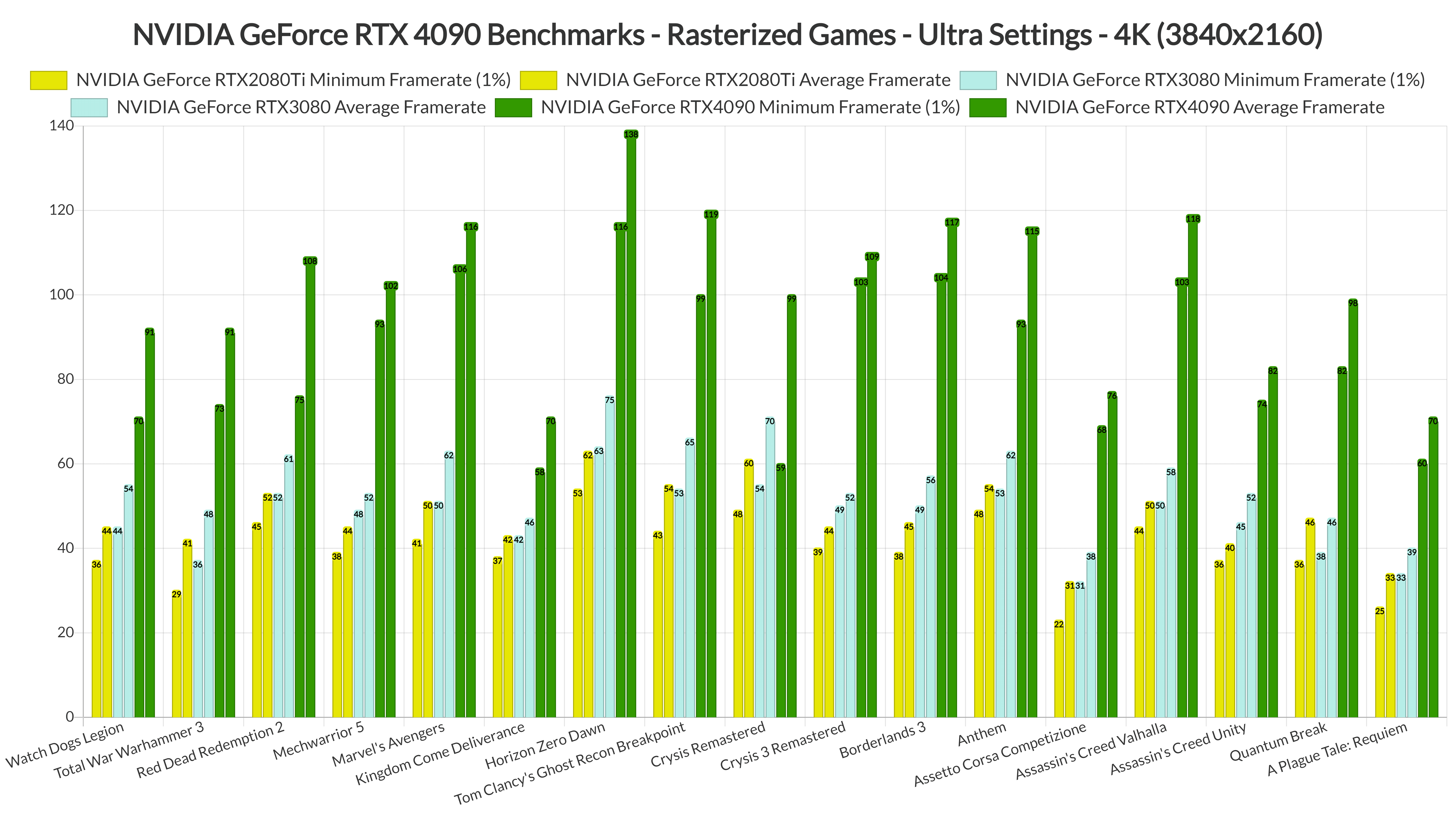

NVIDIA GeForce RTX 4090 vs RTX 3090 Deep Learning Benchmark