RuntimeError: CUDA out of memory. Tried to allocate - Can I solve this problem? - windows - PyTorch Forums

By a mysterious writer

Last updated 26 April 2025

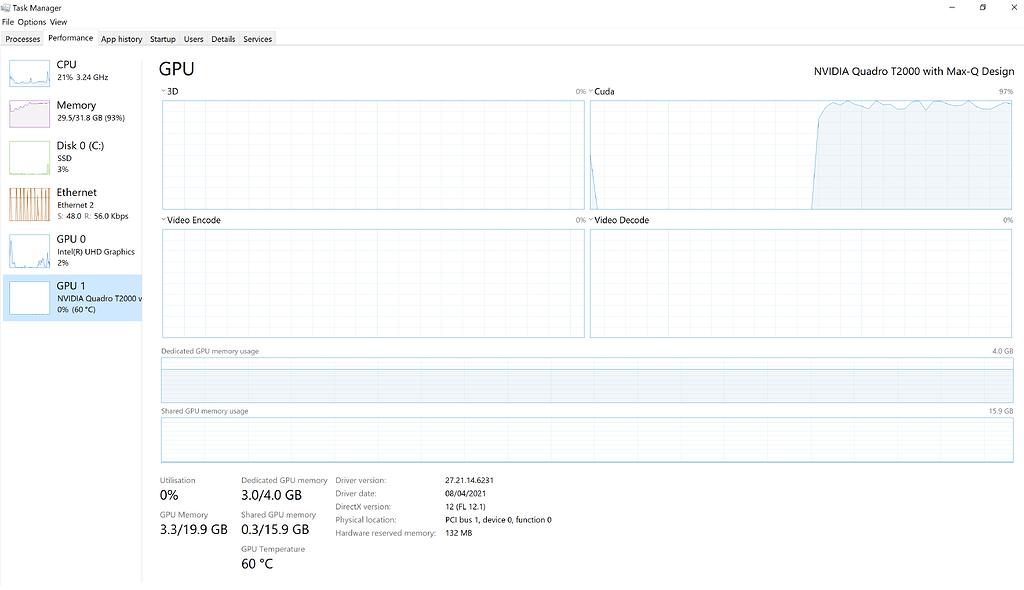

Hello everyone. I am trying to make CUDA work with the OpenAI Whisper release. My current setup works fine on the CPU, where I use the medium.en model. I have installed CUDA-enabled PyTorch on a Windows 10 computer; however, when I try speech-to-text decoding with CUDA enabled, it fails with a memory error: RuntimeError: CUDA out of memory. Tried to allocate 70.00 MiB (GPU 0; 4.00 GiB total capacity; 2.87 GiB already allocated; 0 bytes free; 2.88 GiB reserved in total by PyTorch) If reserved memory is >> allo
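The numbers in that error message can be read off programmatically, which makes it easier to see why a 70 MiB request fails on a 4 GiB card. The following is only a minimal sketch using the Python standard library: `parse_oom` and `to_mib` are hypothetical helper names, not part of PyTorch, and the regular expressions assume the message layout quoted in the post, which may differ between PyTorch versions.

```python
import re

# Error text copied from the post above; the truncated trailing hint is left out.
MESSAGE = (
    "RuntimeError: CUDA out of memory. Tried to allocate 70.00 MiB "
    "(GPU 0; 4.00 GiB total capacity; 2.87 GiB already allocated; "
    "0 bytes free; 2.88 GiB reserved in total by PyTorch)"
)

# Conversion factors from each unit used in the message to MiB.
UNIT_TO_MIB = {
    "bytes": 1 / (1024 * 1024),
    "KiB": 1 / 1024,
    "MiB": 1.0,
    "GiB": 1024.0,
}


def to_mib(value, unit):
    """Convert a (number, unit) pair from the message into MiB."""
    return float(value) * UNIT_TO_MIB[unit]


def parse_oom(message):
    """Extract the memory figures from an OOM message (hypothetical helper).

    Assumes the layout shown in MESSAGE; fields that do not match are skipped.
    """
    size = r"([\d.]+) (bytes|KiB|MiB|GiB)"
    patterns = {
        "requested": rf"Tried to allocate {size}",
        "total": rf"{size} total capacity",
        "allocated": rf"{size} already allocated",
        "free": rf"{size} free",
        "reserved": rf"{size} reserved",
    }
    stats = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, message)
        if match:
            stats[name] = to_mib(*match.groups())
    return stats


stats = parse_oom(MESSAGE)
# Memory PyTorch has reserved from the driver but not handed out to tensors.
slack = stats["reserved"] - stats["allocated"]
print(f"requested: {stats['requested']:.2f} MiB")
print(f"reserved but unallocated: {slack:.2f} MiB")
```

For the message in the post, the reserved-but-unallocated slack is roughly 10 MiB (2.88 GiB minus 2.87 GiB), well short of the 70 MiB requested, and the driver reports 0 bytes free. Read that way, the card is simply full rather than badly fragmented, so the model and its working buffers likely need to be smaller to fit in 4 GiB.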

Help! RuntimeError: CUDA out of memory. Tried to allocate 20.00

python - Getting Cuda Out of Memory while running Longformer Model

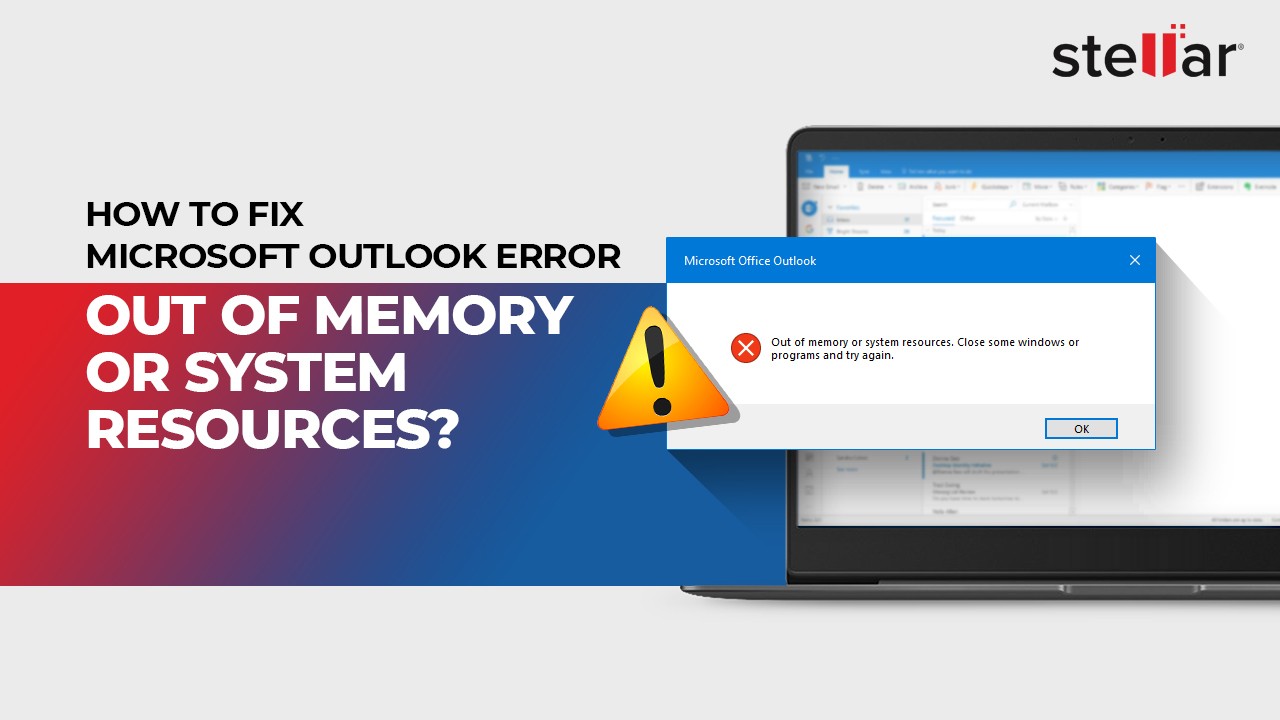

Solving the “RuntimeError: CUDA Out of memory” error

anon8231489123/gpt4-x-alpaca-13b-native-4bit-128g · out of memory

CUDA utilization - PyTorch Forums

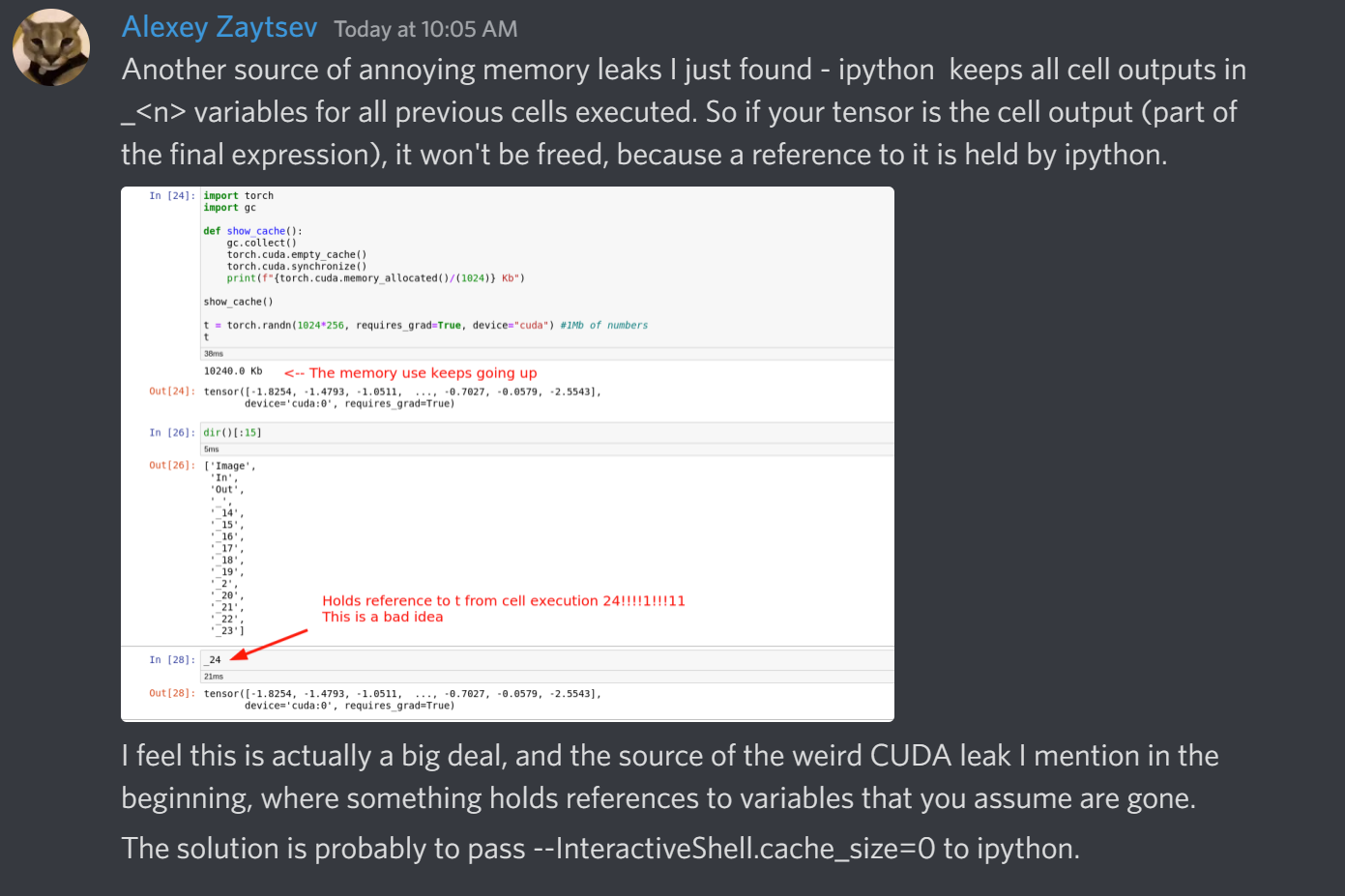

Jupyter+pytorch, or cuda memory help: stop notebook mid training

(Updated) NVIDIA RTX A6000 INCOMPATIBLE WITH PYTORCH - windows

CUDA out of memory. Tried to allocate : r/pytorch

CUDA out of memory · Issue #1699 · ultralytics/yolov3 · GitHub

GPU memory is empty, but CUDA out of memory error occurs - CUDA

help ! RuntimeError: CUDA out of memory. Tried to allocate 1.50

What is PyTorch? Introduction to PyTorch
