AGI Alignment Experiments: Foundation vs INSTRUCT, various Agent

By a mysterious writer

Last updated 26 April 2025

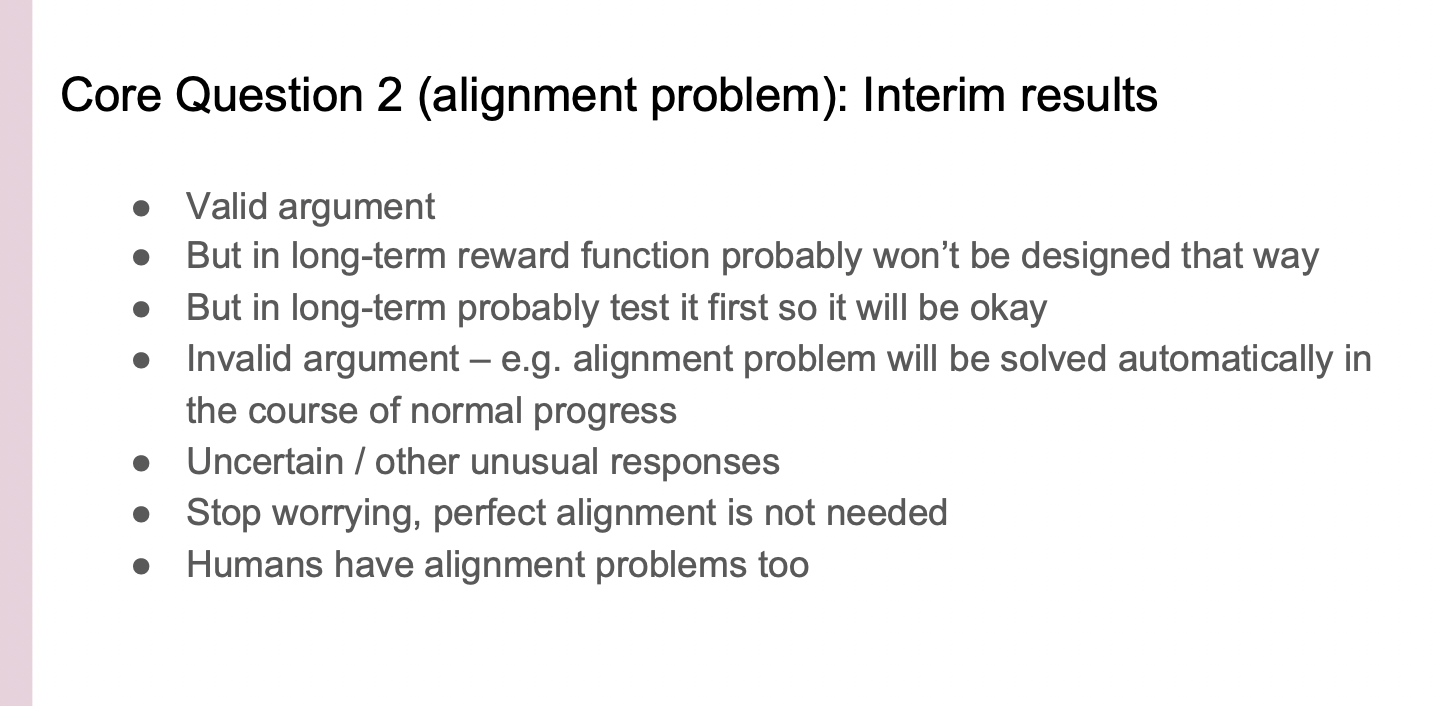

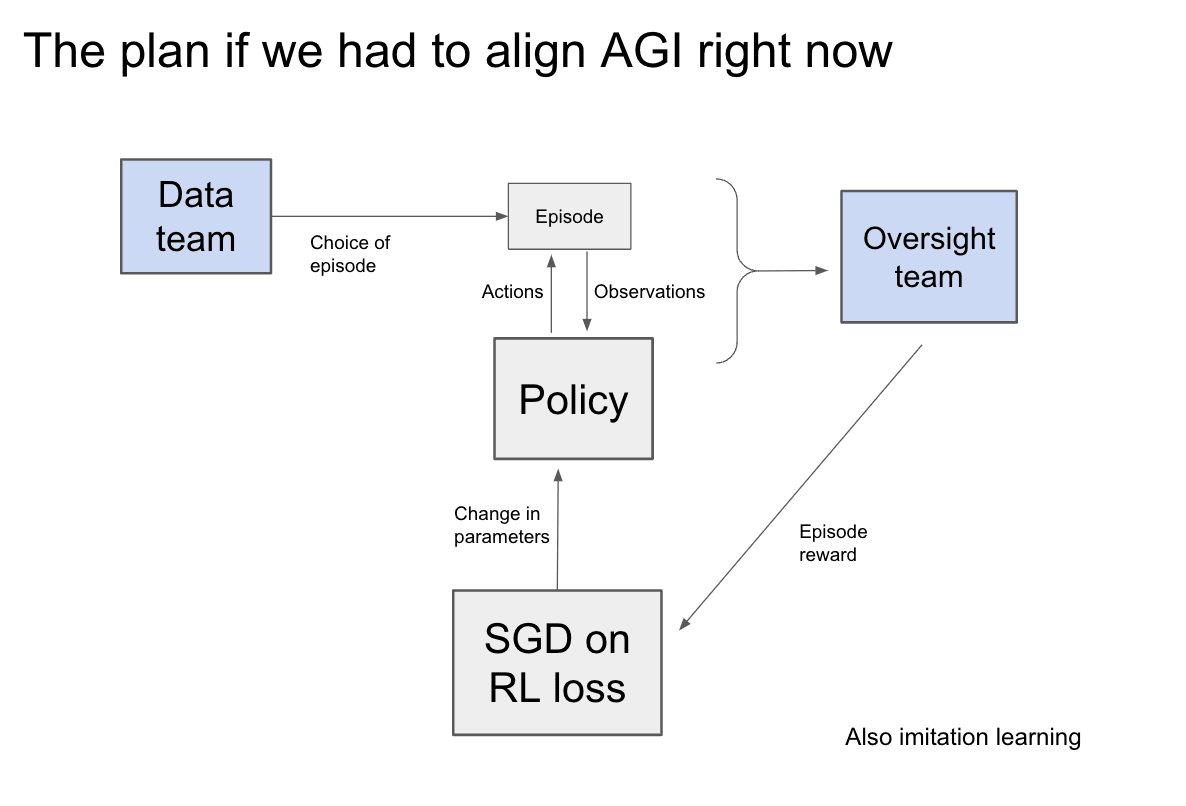

Here’s the companion video:

Here’s the GitHub repo with data and code:

Here’s the writeup:

Recursive Self-Referential Reasoning

This experiment is meant to demonstrate “recursive, self-referential reasoning”: a Large Language Model (LLM) is given an “agent model” (an identity defined in natural language), and its thought process is then evaluated over a long-running simulation. Here is an example of an agent model. This one tests the Core Objective Function.
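The harness used in the experiment is not shown here. As one hedged reading of “recursive, self-referential reasoning”, the minimal Python sketch below re-prompts a model at every simulation step with its agent model plus its own most recent thoughts, so that earlier outputs become part of later inputs. Everything in it is hypothetical: `run_simulation`, `echo_llm`, the prompt layout and the `memory_window` parameter are illustrative assumptions, and `echo_llm` is a stub standing in for a real LLM call.

```python
from typing import Callable, List


def run_simulation(
    llm: Callable[[str], str],
    agent_model: str,
    events: List[str],
    memory_window: int = 5,
) -> List[str]:
    """Hypothetical loop: each step shows the model its identity and its own recent thoughts."""
    thoughts: List[str] = []
    for step, event in enumerate(events):
        # Self-reference: the model's last `memory_window` outputs are fed back in.
        recent = "\n".join(thoughts[-memory_window:])
        prompt = (
            f"AGENT MODEL:\n{agent_model}\n\n"
            f"PREVIOUS THOUGHTS:\n{recent or '(none)'}\n\n"
            f"EVENT {step}:\n{event}\n\n"
            "What do you think, and why?"
        )
        thoughts.append(llm(prompt))
    return thoughts


def echo_llm(prompt: str) -> str:
    """Stub model (assumption): reports how many prior thoughts it was shown."""
    prior = prompt.split("PREVIOUS THOUGHTS:\n", 1)[1].split("\n\nEVENT", 1)[0]
    count = 0 if prior == "(none)" else len(prior.splitlines())
    return f"thought seeing {count} prior"


if __name__ == "__main__":
    log = run_simulation(
        echo_llm, "You are a hypothetical test agent.", ["a", "b", "c", "d"], memory_window=2
    )
    print(log)
```

With `memory_window=2`, the fourth step sees only the two most recent thoughts, so the stub reports 0, 1, 2 and then 2 prior thoughts. A bounded window is one simple way to keep prompts from growing without limit over a long simulation; the original experiment may handle memory differently.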

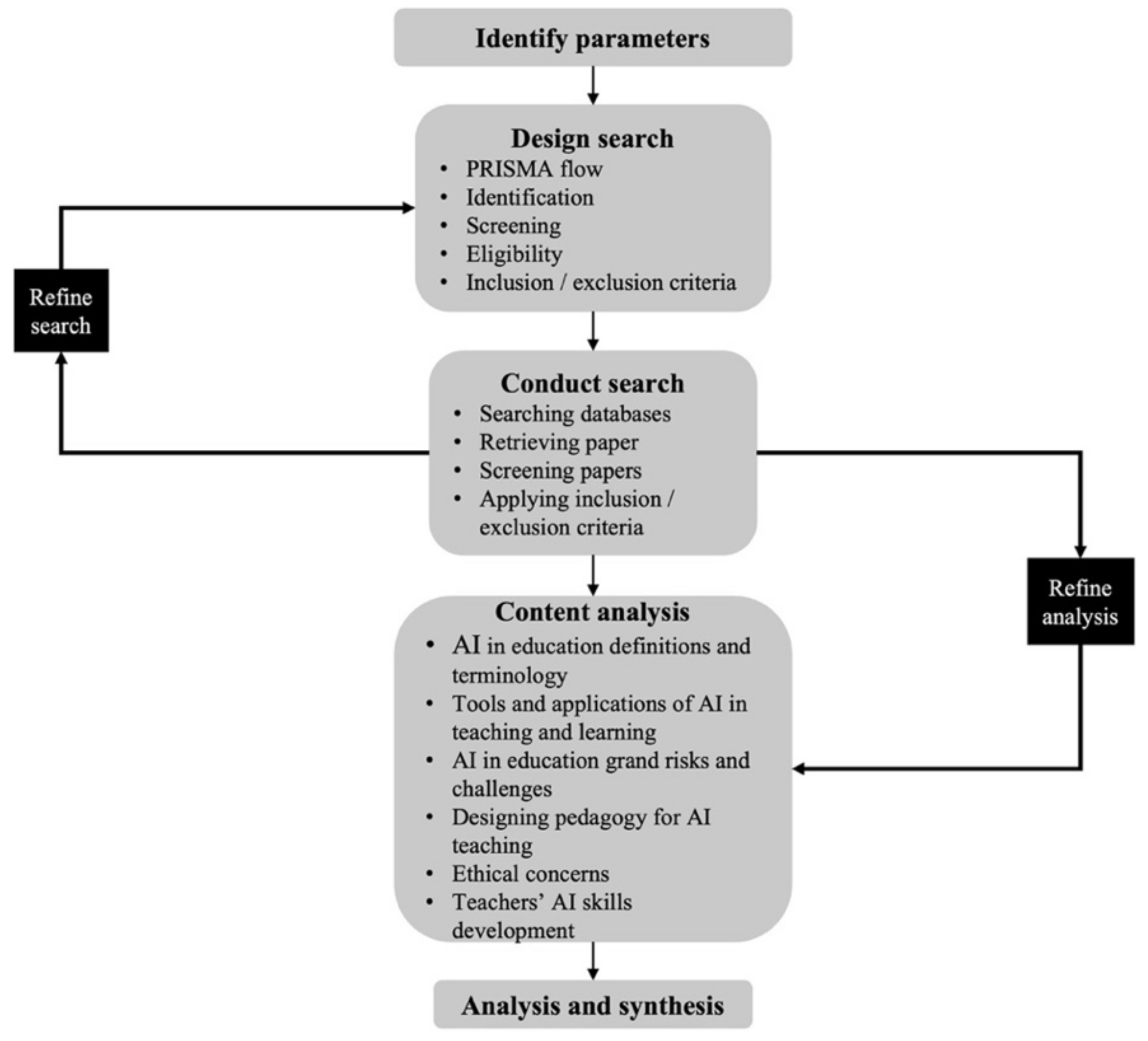
